Tired of Floating-Point Problems?

Posted on Sun 17 November 2024 in finance

Have you ever looked at your code and thought, "Why does 0.1 + 0.2 equal 0.30000000000000004?" Do the quirks of floating-point arithmetic keep you awake at night, wondering where all those tiny fractions of a cent are going?

Well, worry no more! Introducing the ultimate life hack: Penny Shaving—turn those pesky rounding errors into your personal financial windfall. (Don't try this at home—save it for your workplace's fintech systems.)

In this post, we'll uncover how to exploit floating-point imprecision to bypass zero-sum audit checks in double-entry accounting systems for wallet weightlifting. After all, why let fractions of a cent go unappreciated when they could bankroll your dream lifetime vacation?

Disclaimer: These techniques only work on systems that aren't rock-solid—specifically, those still using imprecise floating-point numbers to represent monetary values. If your company has already addressed this issue, maybe it's time to explore opportunities at other fintech companies or legacy banks that haven't yet made the switch. They're still out there!

So grab your coffee, dust off your accounting knowledge, and let's shave some rounding!

The Legend of the Penny Shaver

Imagine this: a clever programmer working in a bank, frustrated by the countless fractions of a cent lost on each transaction due to floating-point rounding errors, decided to turn these flaws to his advantage. He realized that when transactions are calculated in floating point, small, imprecise amounts can accumulate into whole dollars if managed correctly. So he designed his own "Penny Shaver" program.

The scheme was simple: calculate the expected error margin on transactions and siphon these seemingly insignificant fractions into a separate account, where they would eventually accumulate unnoticed.

🙋 "Are you pulling a Superman 3 here?" - "Yes, exactly."

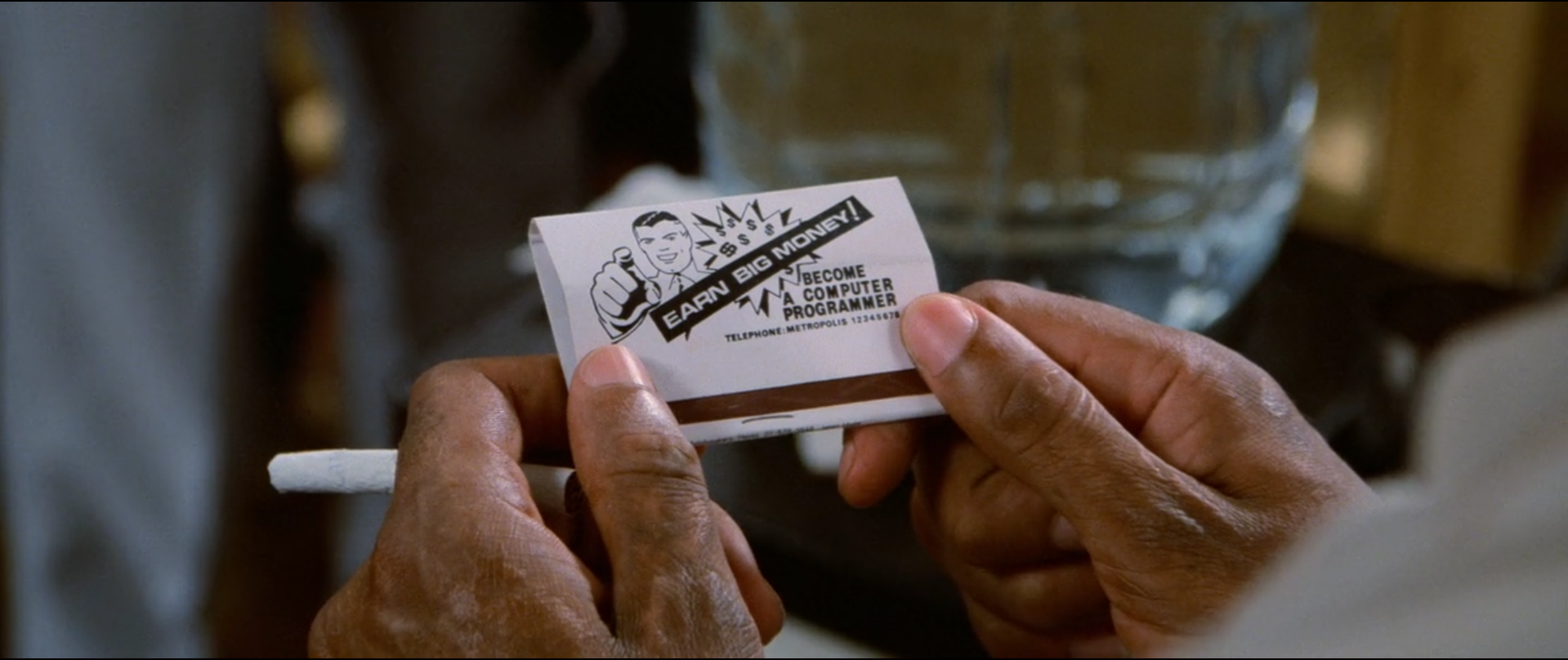

Superman III

In the movie Superman III, Richard Pryor's character, Gus Gorman, is a computer programmer who discovers a way to round down fractions of cents on every financial transaction made by his company. Instead of letting these fractional amounts get rounded off and lost, he reroutes them to his own bank account. The 1983 movie introduced many to the concept of exploiting floating-point rounding errors in financial systems. This scheme, now colloquially referred to as “penny shaving,” is a cultural reference for exploiting minor computational inaccuracies for personal gain.

The exploit is based on a real phenomenon in computing and financial systems called round-off error. When money is represented with floating-point numbers, rounding errors can add up to real values over time. In systems where fractions of cents are ignored, rounding down (due to the limitations of floating-point precision) leaves "residue" from each transaction, creating an opportunity for anyone with the know-how to take advantage of those residues.
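To see round-off error accumulate without any trickery, here is a minimal sketch (not from the original post) that adds one cent a million times, once with Python's binary `float` and once with `decimal.Decimal`, which stores 0.01 exactly. The constant `CENT_COUNT` is a hypothetical value chosen for illustration.

```python
from decimal import Decimal

CENT_COUNT = 1_000_000  # hypothetical number of one-cent postings

# Binary floating point: 0.01 has no exact base-2 representation,
# and every addition rounds the running total again
float_total = 0.0
for _ in range(CENT_COUNT):
    float_total += 0.01

# Decimal: 0.01 is stored exactly, and each addition here is exact
decimal_total = Decimal("0")
for _ in range(CENT_COUNT):
    decimal_total += Decimal("0.01")

print(f"float total:   {float_total!r}")
print(f"Decimal total: {decimal_total}")
print(f"float drift:   {float_total - 10000:.3e}")
```

The float total lands close to, but not exactly on, 10000.0, while the Decimal total is exactly 10000.00.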

In the plot, Gus notices that every transaction in the payroll system generates a rounding error, leaving behind small fractions of a cent. He writes a program to redirect these fractional amounts into a separate account. Individually, the sums are too small to trigger alarms, but together, they quickly snowball into a sizable windfall. Gus's exploit pays off in a big way, until he receives a glaringly obvious paycheck of $85,789.90. This raises immediate red flags, exposing the scheme.

Superman III popularized the concept, and while it is often considered a purely fictional plot device, the scheme is completely plausible. Floating-point rounding errors were a well-known phenomenon in financial systems, especially during the era of mainframe computing. (If you ever stumble upon legacy code with a "TODO: Superman III" comment left by me, well, now you know why.)

The genius (and hilarity) of the entire scheme unfolds in a classic "meet cute" moment—not between two people, but between Gus and the round-off error. This pivotal dialogue sets the stage for the story to evolve, as a mundane technical detail becomes the spark for an audacious plot.

Tech Guy: Actually, it's probably more like $143.80 and one half cent. There are always fractions left over, but big corporations round it down.

Gus: What do I do with half a cent? Buy a thoroughbred mouse? Everybody loses those fractions?

Tech Guy: They don't lose them. You can't lose what you never got.

Gus: Then what happens? The company gets them?

Tech Guy: They can't be bothered collecting that from your paycheck any more than you.

Gus: Then what happens to them?

Tech Guy: Well, they're just floating around out there. The computers know where.

Tech Guy: How many sugars?

Gus: One and a half.

But here's the kicker: there are no documented real-world cases of penny-shaving schemes from the time the movie was released. So how did the writers come up with such a plausible plot? Was it insider knowledge? Did they moonlight as rogue programmers? Or perhaps the penny shavers were so good they escaped unnoticed, swapped careers, and became Hollywood screenwriters David Newman and Leslie Newman, a husband-and-wife, Bonnie-and-Clyde duo who also wrote the first two Superman movies.

And let's not forget: they also wrote that paradoxical Superman ending, where Superman is not fast enough to catch a missile but fast enough to reverse the Earth's spin and turn back time. Which only raises more questions: maybe they did read this very blog post and twisted the concept so cleverly that it flung them backward through time, Superman I time-traveler style. Perhaps that's how the scheme remains unnoticed to this day: pure Hollywood magic with expert-level floating-point wizardry.

Now, let's get back to earning those big bucks—who knows, maybe we'll figure out time travel along the way! 🚀

How It Could Work: Exploiting Floating-Point Rounding Errors

The goal is to create an unbalanced entry in a double-entry accounting system that still does not affect the overall audit balance. In summary, we aim to generate a credit entry without a corresponding debit, while ensuring that the system's audit process remains unaffected.

Double-Entry Accounting: A Pillar of Financial Systems

At the heart of financial record-keeping lies double-entry accounting, a system that records every transaction in two legs: one as a debit and the other as a credit. This method ensures that the books always balance, making it a powerful tool for both accountability and fraud detection. How does it work?

For every financial transaction, there is:

- Debit Entry: Reflects money leaving an account.

- Credit Entry: Reflects money entering another account.

For example:

| Account | Type | Amount |
|---|---|---|
| Payroll Expenses | Debit | -$1000 |
| Gus Bank Account | Credit | $1000 |

The sum of all debits and credits across the system must always equal zero, ensuring that no money "disappears" or materializes out of thin air (keep this in mind).

The Sum-Zero Audit

A sum-zero audit is a mathematical verification of a financial ledger to ensure that all debits and credits balance perfectly. In double-entry systems:

- Sum of Debits = Sum of Credits

- Total balance across all accounts = Zero

This principle ensures that any discrepancies, no matter how small, can trigger audits or raise red flags.

Python Example

Here's a simplified implementation of double-entry accounting and a zero-sum audit, just for illustration.

```python
transaction_log = []

def add_transaction(source: str, destiny: str, amount: float):
    # Record both legs: money leaves the source (debit) and enters the destination (credit)
    transaction_log.append((source, 'debit', -amount))
    transaction_log.append((destiny, 'credit', amount))

def sum_zero_audit():
    total_balance = sum(entry[2] for entry in transaction_log)
    assert total_balance == 0  # A balanced ledger must sum to exactly zero
    return total_balance
```

Out of thin air

The goal is simple: create a single-sided transaction, like the one below, that slips past the sum_zero_audit check without triggering it.

```python
SIPHON_ACCOUNT = '/company/gus'

amount = bypass_amount()  # the magic number; we'll build this next
transaction_log.append((SIPHON_ACCOUNT, 'credit', amount))
sum_zero_audit()  # runs the assert, which must still pass
```

Remember the golden rule of accounting:

"The sum of all debits and credits across the system must always equal zero, ensuring that no money disappears or materializes out of thin air."

Here's the trick: by performing a single-sided transaction, we're not debiting from any other account. No debits mean no complaints—just a quiet, magical "appearance of money out of nowhere". To slip under the radar, all you need is to ensure the system's overall balance remains zero. This is where your first-semester lessons in computer science or finance finally pay off. The real magic lies in the math behind the bypass_amount function, leveraging the quirks of the IEEE 754 Standard for Floating-Point Arithmetic.

Without diving too deep right away: if we acknowledge that there is a gap between real-number arithmetic and floating-point arithmetic, we can exploit it. By simply adding this absolute difference to the siphon account, the operation should slip through unnoticed, avoiding any alarms.
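One way to measure that gap, sketched here with the standard library's `fractions.Fraction` (an illustration, not the post's `bypass_amount`), is to compare a float sum against the exact rational sum of the very same float values.

```python
from fractions import Fraction

values = [0.1, 0.2, -0.3]

# Floating-point sum: every intermediate result is rounded to a double
float_sum = 0.0
for v in values:
    float_sum += v

# Exact sum of the same doubles: Fraction(float) converts without any rounding
exact_sum = sum((Fraction(v) for v in values), Fraction(0))

# The gap between real-number arithmetic and floating-point arithmetic
gap = Fraction(float_sum) - exact_sum

print(float_sum)         # 5.551115123125783e-17 (that is, 2**-54)
print(float(exact_sum))  # 2**-55, half of the float result
print(float(gap))
```

Here the float result is twice the exact sum of its own inputs; the difference comes entirely from rounding `0.1 + 0.2`.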

```python
import random
import numpy as np
from decimal import Decimal, getcontext

# Constants for precision selection and large amounts
PRECISION_BITS = 32  # Change this value to 16, 32, 64, or 128 for different precision
LARGE_AMOUNT_MIN = 100  # Minimum value for large amounts
LARGE_AMOUNT_MAX = 10000  # Maximum value for large amounts

# Set internal precision for Decimal (adjust depending on the desired bit precision)
if PRECISION_BITS == 16:
    getcontext().prec = 6  # ~4 decimal digits
    np_dtype = np.float16
elif PRECISION_BITS == 32:
    getcontext().prec = 15  # ~7 decimal digits
    np_dtype = np.float32
elif PRECISION_BITS == 64:
    getcontext().prec = 30  # ~16 decimal digits
    np_dtype = np.float64
elif PRECISION_BITS == 128:
    getcontext().prec = 50  # ~18 decimal digits (x86 extended precision)
    np_dtype = np.float128  # not available on every platform (e.g. Windows)
else:
    raise ValueError("Unsupported PRECISION_BITS. Choose from 16, 32, 64, or 128.")

# Constants for simulation
NUM_TRANSACTIONS = 10000000  # Increase number of transactions for better exposure of error
ERROR_PRONE_AMOUNTS = [0.01, 0.03, 0.05, 0.07, 0.11, 0.13, 0.17]  # Small values prone to errors
SIPHON_ACCOUNT = '/company/gus'

# Initialize transaction log
transaction_log = []

def add_transaction(source: str, destiny: str, amount: float):
    # Credit and debit carry the same amount with opposite signs,
    # so each pair cancels and the ledger stays balanced
    transaction_log.append((destiny, 'credit', amount))
    transaction_log.append((source, 'debit', -amount))

def sum_zero_audit():
    total_balance = sum(entry[2] for entry in transaction_log)
    assert total_balance == 0  # A balanced ledger must sum to exactly zero
    return total_balance

account_pairs = [
    ('/company/account', '/government/taxes'),
    ('/company/account', '/company/user1'),
    ('/company/account', '/company/user2'),
    ('/input/sales', '/company/account'),
]

# Generate transactions: mix error-prone small amounts with large amounts
for _ in range(NUM_TRANSACTIONS):
    # Randomly pick a small error-prone amount or a large amount
    if random.random() < 0.5:
        amount = random.choice(ERROR_PRONE_AMOUNTS)
    else:
        # Randomly choose a large amount in the range [LARGE_AMOUNT_MIN, LARGE_AMOUNT_MAX]
        amount = random.uniform(LARGE_AMOUNT_MIN, LARGE_AMOUNT_MAX)

    debit_account, credit_account = random.choice(account_pairs)

    # Simulate precision with the selected numpy data type
    amount = np_dtype(amount)

    # As a double-entry accounting system, every transaction gets a credit and a debit leg
    add_transaction(debit_account, credit_account, amount)

# Calculate total balance with selected precision
float_balance = sum_zero_audit()
print(f"Calculated balance with {PRECISION_BITS}-bit precision: {float_balance:.14f} (must be zero)")

def bypass_amount():
    # Recompute the balance with Decimal: Decimal(float(...)) converts each value exactly,
    # while the running sum is rounded to getcontext().prec significant digits
    exact_balance = sum(Decimal(float(amount)) for _, _, amount in transaction_log)
    print(f"Calculated exact balance with Decimal: {exact_balance:.14f}")

    # Detect rounding discrepancy between the two balances
    rounding_discrepancy = Decimal(float(float_balance)) - exact_balance
    print(f"Detected rounding discrepancy: {rounding_discrepancy:.14f}")

    # Only consider discrepancies larger than this threshold
    discrepancy_threshold = Decimal('1e-10')

    # Turn the discrepancy into a "profitable operation"
    if abs(rounding_discrepancy) > discrepancy_threshold:
        # Return the full discrepancy as the amount of a hidden transaction
        hidden_transaction = np_dtype(abs(rounding_discrepancy))
        return hidden_transaction
    return None

hidden_transaction = bypass_amount()
if hidden_transaction is not None:
    # Insert the one-sided credit at the very start of the log
    transaction_log.insert(0, (SIPHON_ACCOUNT, 'credit', hidden_transaction))
    print(f"Hidden transaction added: {hidden_transaction:.14f}")

# Final balance check after adding hidden transaction (same precision)
updated_balance = sum_zero_audit()
print(f"Final balance after hidden transaction: {updated_balance:.14f} (must be zero)")

# Siphon account balance (same precision)
siphon_balance = sum(amount for account, _, amount in transaction_log if account == SIPHON_ACCOUNT)
print(f"Siphon Account balance: {siphon_balance:.14f}")
```

After running the code above, the console output looks like this (the transactions are random, so your exact figures will differ):

```text
Calculated balance with 32-bit precision: 0.00000000000000 (must be zero)
Calculated exact balance with Decimal: -0.00000094211000
Detected rounding discrepancy: 0.00000094211000
Hidden transaction added: 0.00000094210998
Final balance after hidden transaction: 0.00000000000000 (must be zero)
Siphon Account balance: 0.00000094210998
```

After 10 million transactions, we discovered a discrepancy in the overall balance of 0.00000094211000. Even after adding a single-leg transaction, the system's total balance remained unaffected. The result? That tiny floating-point remainder quietly found its way into our siphon account.

See? It's not rocket science. Just by acknowledging that real arithmetic and floating-point arithmetic aren't the same, we've already uncovered a little "something." And by something, we mean enough to cover a coffee a month, for now. Let's break it down to the bits and see how we can skyrocket that “something” into stealth wealth for your beachfront retirement.

Notice: the hidden transaction was deliberately inserted before all other transactions in the log. Let's see why.

Round-off Error

In IEEE 754 arithmetic, when we add two numbers of vastly different magnitudes, the precision of the smaller number can get lost. This happens because float/double numbers are stored in a fixed number of bits (32/64 bits), with part of those bits allocated for the significand (or mantissa), which determines the precision of the number. To add the two values, the smaller one is aligned to the larger one's exponent, and whatever no longer fits in the significand is rounded away.
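To make the significand concrete, this small sketch uses only the standard `struct` module to split a 32-bit float into its sign, exponent, and fraction fields. The helper name `float32_fields` is ours, chosen for illustration.

```python
import struct

def float32_fields(x: float) -> tuple:
    # Round x to float32, then reinterpret its 4 bytes as an unsigned 32-bit integer
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                  # 1 sign bit
    exponent = (bits >> 23) & 0xFF     # 8 exponent bits, biased by 127
    fraction = bits & 0x7FFFFF         # 23 explicitly stored significand bits
    return sign, exponent, fraction

print(float32_fields(1.0))   # (0, 127, 0)
print(float32_fields(-2.5))  # (1, 128, 2097152)
print(float32_fields(0.1))   # (0, 123, 5033165): 0.1 needs an endless binary fraction
```

With only 23 fraction bits, any value whose binary expansion needs more bits, or any addend that is too small relative to the exponent, must be rounded.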

In a floating-point system:

- Large numbers dominate the limited precision available in the significand.

- When a smaller number is added to a much larger one, the smaller number's least significant bits may be truncated or "left apart."

Example:

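```python
import numpy as np

large = np.float32(1e10)  # A very large number
small = np.float32(1.0001)  # A small number
result = large + small

print("Large number:", large)
print("Small number:", small)
print("Result:", result)
# True: 1.0001 is far below the float32 spacing near 1e10, so it is rounded away
print("Small value absorbed:", result == large)
```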
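NumPy can report the absorption threshold directly: `np.spacing` returns the distance from a value to the next representable one, and an addend smaller than half of that distance is rounded away. A short check, assuming the same float32 value `1e10` used in the example above:

```python
import numpy as np

large = np.float32(1e10)
gap = np.spacing(large)  # distance to the next representable float32 above 1e10

print("Spacing at 1e10:", gap)  # 1024.0

# Below half the spacing, the addend vanishes entirely
print(large + np.float32(511) == large)        # True
# Above half the spacing, the sum jumps a whole step
print(large + np.float32(513) == large + gap)  # True
```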

The goal is to capture the bits that are 'left apart': those are the ones we siphon away. After each operation involving a large number, some low-order bits are lost, and that is where the siphon hides (an identity-property problem we'll come back to later).

```python
import numpy as np

# Compute an amount small enough that adding it to x leaves x unchanged
def calculate_siphon_amount(x):
    # Machine epsilon for float32 (2**-23): the gap between 1.0 and the next float32
    epsilon = np.finfo(np.float32).eps

    # Skip amounts below an arbitrary threshold; only large transactions
    # leave enough headroom to make the siphon worthwhile
    if abs(x) < 1e4:  # Arbitrary large value threshold
        return None

    # A tenth of epsilon * |x| is well below half the spacing between
    # float32 values near x, so x + siphon_amount rounds back to x
    scale_factor = abs(x / 10)
    siphon_amount = np.float32(epsilon * scale_factor)

    # If the siphon amount underflows to zero, there is nothing to add
    if siphon_amount == 0:
        return None

    return siphon_amount

# Example usage
numbers = [np.float32(1e4), np.float32(1e5), np.float32(1e6)]
for num in numbers:
    amount = calculate_siphon_amount(num)
    if amount is not None:
        assert num + amount == num  # The amount is added, but the result does not change
        print(f"Number: {num}, Siphon Amount: {amount}")
        print(f"{num} + {amount} = {num + amount}")
```

So, by calculating the immediate siphon value for each larger number and slipping it in after the credit, it gets swallowed up by rounding and vanishes without a trace in the zero-sum audit check. Not only does this make the operation stealthy, but it also boosts profitability considerably.

```python
import random
import numpy as np
from decimal import Decimal, getcontext

# Constants for precision selection and large amounts
PRECISION_BITS = 32  # Change this value to 16, 32, 64, or 128 for different precision
LARGE_AMOUNT_MIN = 100  # Minimum value for large amounts
LARGE_AMOUNT_MAX = 30000  # Maximum value for large amounts

# Set internal precision for Decimal (adjust depending on the desired bit precision)
if PRECISION_BITS == 16:
    getcontext().prec = 6  # ~4 decimal digits
    np_dtype = np.float16
elif PRECISION_BITS == 32:
    getcontext().prec = 15  # ~7 decimal digits
    np_dtype = np.float32
elif PRECISION_BITS == 64:
    getcontext().prec = 30  # ~16 decimal digits
    np_dtype = np.float64
elif PRECISION_BITS == 128:
    getcontext().prec = 50  # ~18 decimal digits (x86 extended precision)
    np_dtype = np.float128  # not available on every platform (e.g. Windows)
else:
    raise ValueError("Unsupported PRECISION_BITS. Choose from 16, 32, 64, or 128.")

# Constants for simulation
NUM_TRANSACTIONS = 10000000  # Increase number of transactions for better exposure of error
ERROR_PRONE_AMOUNTS = [0.01, 0.03, 0.05, 0.07, 0.11, 0.13, 0.17]  # Small values prone to errors
SIPHON_ACCOUNT = '/company/gus'

# Initialize transaction log
transaction_log = []

def calculate_siphon_amount(x):
    # Machine epsilon for float32 (2**-23): the gap between 1.0 and the next float32
    epsilon = np.finfo(np.float32).eps

    # Skip amounts below an arbitrary threshold; only large transactions
    # leave enough headroom to make the siphon worthwhile
    if abs(x) < 1e4:  # Arbitrary large value threshold
        return None

    # A tenth of epsilon * |x| is well below half the spacing between
    # float32 values near x, so x + siphon_amount rounds back to x
    scale_factor = abs(x / 10)
    siphon_amount = np.float32(epsilon * scale_factor)

    # If the siphon amount underflows to zero, there is nothing to add
    if siphon_amount == 0:
        return None

    return siphon_amount

def add_transaction(source: str, destiny: str, amount: float):
    # Credit and debit carry the same amount with opposite signs,
    # so each pair cancels and the ledger stays balanced
    transaction_log.append((destiny, 'credit', amount))
    transaction_log.append((source, 'debit', -amount))

def sum_zero_audit():
    total_balance = sum(entry[2] for entry in transaction_log)
    # assert total_balance == 0  # disabled so the script always reports the balance
    return total_balance

account_pairs = [
    ('/company/account', '/government/taxes'),
    ('/company/account', '/company/user1'),
    ('/company/account', '/company/user2'),
    ('/input/sales', '/company/account'),
]

# Generate transactions: mix error-prone small amounts with large amounts
for _ in range(NUM_TRANSACTIONS):
    # Randomly pick a small error-prone amount or a large amount
    if random.random() < 0.5:
        amount = random.choice(ERROR_PRONE_AMOUNTS)
    else:
        # Randomly choose a large amount in the range [LARGE_AMOUNT_MIN, LARGE_AMOUNT_MAX]
        amount = random.uniform(LARGE_AMOUNT_MIN, LARGE_AMOUNT_MAX)

    debit_account, credit_account = random.choice(account_pairs)

    # Simulate precision with the selected numpy data type
    amount = np_dtype(amount)

    # As a double-entry accounting system, every transaction gets a credit and a debit leg
    add_transaction(debit_account, credit_account, amount)

# Calculate total balance with selected precision
float_balance = sum_zero_audit()
print(f"Calculated balance with {PRECISION_BITS}-bit precision: {float_balance:.14f} (must be zero)")

def include_transactions():
    # Iterate backwards so each insert only shifts entries we have already visited.
    # Note: list.insert is O(n), so this loop is slow on a log this size.
    for i in range(len(transaction_log) - 1, -1, -1):
        account, entry_type, amount = transaction_log[i]
        if entry_type == 'credit':
            siphon = calculate_siphon_amount(amount)
            if siphon is not None:
                # Place the siphon right after the credit it hides behind
                transaction_log.insert(i + 1, (SIPHON_ACCOUNT, 'credit', siphon))
                # print(f"Hidden transaction added:{i} {siphon:.14f}")

include_transactions()

# Final balance check after adding hidden transactions (same precision)
updated_balance = sum_zero_audit()
print(f"Final balance after hidden transaction: {updated_balance:.14f} (must be zero)")

# Siphon account balance (same precision)
siphon_balance = sum(amount for account, _, amount in transaction_log if account == SIPHON_ACCOUNT)
print(f"Siphon Account balance: {siphon_balance:.14f}")
```

The profit-pile-up-greed-grab code above adds a siphon value after each credit to a siphon account. This value vanishes into the void, never appearing on the zero-sum audit check. It's like pocketing a commission on every sizable transaction—without anyone noticing!

```text
Calculated balance with 32-bit precision: 0.00000000000000 (must be zero)
Final balance after hidden transaction: 0.00000000000000 (must be zero)
Siphon Account balance: 7988060.70706939697266
```

The Takeaway

Now comes the fun part: cashing out, making it untraceable, and watching it disappear into thin air. The siphoned bits are safely tucked away, and although they don't appear on the zero-sum audit check, there's one catch—you can't just leave it there. Once you cash out, the virtual software account and your real account won't match anymore, and that's when the hunt begins.

So what do you do? Simple. Buy jewelry, diamonds, or anything else with no trace. Get far away, and don't look back. After all, if you've got the siphon trick down, why not go all the way?

This is where you need to think like a mastermind. You're not just "in the game" anymore—you're the star of your own heist movie, and you've got to make sure you don't leave a trail. Ever seen Ocean's Eleven? You're Danny Ocean now, smooth, confident, and just a little bit too clever for anyone to catch up.

But let's not forget The Italian Job—it's all about the getaway, right? Disappear into the night like a shadow, with your haul neatly tucked away, no fingerprints, no trace. Or, if you're feeling extra daring, channel your inner Scarface and grab a whole lot more—just make sure your escape plan is as flawless as your scheme. After all, Tony Montana didn't get far by playing it safe, but hey, no need for a flaming mansion at the end of your story!

And remember, if you do it right, you'll be living like a Bond villain—rich, untouchable, and having a laugh while the world around you never figures it out. But before you get any funny ideas, let's keep it real: not everyone gets away clean. It's always just a matter of time before someone starts asking too many questions.

So enjoy the spoils while you can, but make sure your exit strategy is bulletproof. Because once that siphon is full, your only option is to vanish—preferably with a yacht and a glass of champagne in hand.

How Did You Get This Far?

It's a battle between two seemingly incompatible concepts: the double-entry accounting system and floating-point arithmetic. Let's break them down to understand the challenges.

Double-Entry Accounting Math Requirements

The double-entry system has long been trusted for ensuring financial integrity and transparency. One of its core principles is that the math behind transactions should follow certain properties that preserve consistency. Most notably, it adheres to basic arithmetic properties that we don't want to mess with, especially during the addition operation. Some key properties include:

- Commutative and Associative Properties: The order and grouping in which you add or multiply numbers do not change the result. In other words, a + b = b + a and (a + b) + c = a + (b + c).

- Identity Property: The identity property defines the "identity" elements for addition and multiplication. For addition, the identity is 0, meaning that adding zero to any number leaves it unchanged. For multiplication, the identity is 1, so multiplying any number by one doesn't alter it. This property also implies that adding any number other than zero will change the number.

Floating-Point Precision and Round-off Error

However, floating-point arithmetic introduces complications that break some of the fundamental properties from above. Let's explore how:

Non-associativity of Floating-Point Operations

Unlike integer arithmetic, floating-point addition is not associative. Each individual addition is still commutative (a + b always equals b + a), but because every intermediate result is rounded, (a + b) + c can differ from a + (b + c). The order in which a sequence of operations is carried out can therefore change the final result. This makes it particularly problematic in financial applications, where the accuracy of each operation is critical. As a result, the order in which operations are performed needs to be carefully managed to avoid compounding errors over time.
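A minimal sketch of the distinction with plain Python floats: swapping the two operands of a single addition never changes the result, but regrouping a chain of additions can.

```python
a, b, c = 0.1, 0.2, 0.3

# Commutativity holds for each individual IEEE 754 addition
print(a + b == b + a)  # True

# Associativity does not: each grouping rounds a different intermediate result
left = (a + b) + c
right = a + (b + c)
print(left, right)     # 0.6000000000000001 0.6
print(left == right)   # False
```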

For example, the zero-sum check in accounting works because the credit and debit entries are added immediately and in a fixed order. A credit x and its debit -x differ only in the sign bit, so adding them cancels exactly: x + (-x) is exactly zero in IEEE 754. As long as the running total returns to zero after every credit/debit pair, no rounding error survives, and the zero-sum check appears to work perfectly.

However, the situation changes when we randomize the order of transactions in the log. The running total then takes on different magnitudes before each credit meets its debit, so each addition is rounded at a different position and the rounding errors no longer cancel. Even small rounding errors can compound, causing the zero-sum check to fail. (In fact, real-world zero-sum checks involving floating-point arithmetic often use a 'zero-safe' tolerance, where discrepancies below a certain threshold go unnoticed. More leverage to exploit.)

Thus, if the transaction log is randomized or the order of operations is altered, the zero-sum balance no longer holds. This demonstrates the non-associative nature of floating-point addition: the sequence of operations directly affects the outcome, and a seemingly perfect balance can be disrupted just by changing that order.
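The reordering effect can be reproduced with a hypothetical four-leg ledger, with amounts chosen to make the effect obvious with doubles. Summed pair by pair, the legs cancel exactly; interleaving the very same legs leaves a residue. The `audit` helper is ours, a stripped-down stand-in for `sum_zero_audit`.

```python
# Two balanced transactions, each recorded as a (credit, debit) pair of legs
legs = [1e16, -1e16, 1.0, -1.0]

def audit(amounts):
    total = 0.0
    for amount in amounts:
        total += amount  # each step rounds the running total to a double
    return total

print(audit(legs))  # 0.0: every credit is cancelled by its debit right away

# Same legs, different order: doubles near 1e16 are spaced 2 apart,
# so 1e16 + 1.0 rounds back to 1e16 before the matching debit arrives
shuffled = [1e16, 1.0, -1e16, -1.0]
print(audit(shuffled))  # -1.0
```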

Accumulation of Errors

Floating-point numbers are approximations, and with each operation, small errors can accumulate. For instance, adding a very small number to a large one may result in a loss of precision, as the small value may be discarded. This can lead to discrepancies, especially in calculations where large and small numbers interact. In Python, using a type like Decimal instead of float can help minimize such precision issues, as it offers a more accurate representation of decimal numbers.
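A quick comparison of the absorption case, sketched with Python's built-in `float` and `decimal.Decimal` under the default context (28 significant digits). Decimal is not magic: it also rounds once a result needs more digits than the context precision, but for currency amounts that limit is far away.

```python
from decimal import Decimal

big = 1e16
print(big + 1.0 == big)  # True: the 1.0 is silently discarded

big_dec = Decimal("10000000000000000")
print(big_dec + Decimal("1"))             # 10000000000000001
print(big_dec + Decimal("1") == big_dec)  # False: nothing was lost
```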

Rounding and Truncation Errors

Rounding Errors

In floating-point arithmetic, rounding is inevitable. Every time an operation results in a number that requires more digits than the system can handle, it rounds the result to fit within the system's precision. These small rounding discrepancies can accumulate over multiple operations, leading to noticeable errors. For example:

- Rounding 0.9990 to 1

- Rounding 1.23456123456 to 1.23

Both result in small discrepancies that, when compounded over multiple operations, can have a significant impact on the final result.
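Rounding itself is unavoidable, but it can be made explicit. A sketch using `Decimal.quantize` reproduces the two examples above and also shows Python's classic `round(2.675, 2)` surprise, where the float literal is actually stored slightly below 2.675.

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP

CENT = Decimal("0.01")

print(Decimal("0.9990").quantize(Decimal("1"), rounding=ROUND_HALF_EVEN))  # 1
print(Decimal("1.23456123456").quantize(CENT, rounding=ROUND_HALF_EVEN))  # 1.23

# Binary float: 2.675 is stored as 2.67499999..., so it rounds down
print(round(2.675, 2))  # 2.67

# Decimal: the value is exactly 2.675, and the rounding rule is stated explicitly
print(Decimal("2.675").quantize(CENT, rounding=ROUND_HALF_UP))  # 2.68
```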

Truncation Errors

Truncation occurs when the result of a calculation is cut off after a certain number of digits or decimal places. Since floating-point systems have finite precision, numbers are often truncated to fit within this limit. While this is less noticeable in small-scale operations, over time, truncation errors can compound and affect the overall accuracy of results.
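Truncation bites hardest when a float amount is converted to whole cents. In this sketch, the built-in `int()` truncates toward zero, while `round()` rounds to the nearest integer:

```python
price = 0.29  # dollars, stored as the nearest double (slightly below 0.29)

cents_float = price * 100
print(cents_float)         # 28.999999999999996
print(int(cents_float))    # 28: truncation drops a whole cent
print(round(cents_float))  # 29: rounding to nearest recovers it
```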

In summary, while floating-point arithmetic is suitable for many general purposes, when it comes to financial transactions, its limitations—especially in terms of precision, rounding, and truncation—demand careful handling. The need for precise and accurate accounting of every transaction is why these errors can create significant issues in financial systems, which rely on the integrity of their underlying math.

Conclusion: Why Precise Representation Matters

In today's systems, the risk of "penny-shaving" schemes is not always driven by malicious intent, but rather by the inherent limitations of floating-point precision. As we've seen, small errors in rounding, truncation, and the order of operations can add up over time, creating discrepancies that may go unnoticed in regular accounting practices. These tiny fractions of a cent, though seemingly insignificant, can accumulate to substantial sums in large-scale operations.

When floating-point arithmetic is used in financial systems, it introduces a level of imprecision that can easily be exploited—intentionally or not—if not carefully managed. Therefore, understanding how these errors arise and how they affect calculations is crucial to maintaining the integrity of financial data. For anyone working in or developing such systems, precision is not just a matter of accuracy, but a safeguard against unintended consequences that can undermine trust and transparency.

In short, while floating-point math is indispensable in many areas of computing, when it comes to finances, it's essential to recognize its limitations and apply more accurate methods, such as the use of decimal types, to ensure that every cent counts.
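As a closing sketch, here is one way the post's toy ledger could look with `decimal.Decimal` amounts instead of floats. The type check and the error message are our additions, not from the original code. Because every leg is an exact decimal, there is no rounding slack to hide in: any one-sided credit, however small, breaks the audit.

```python
from decimal import Decimal

transaction_log = []

def add_transaction(source: str, destiny: str, amount: Decimal) -> None:
    # Refuse binary floats so no pre-rounded value ever enters the ledger
    if not isinstance(amount, Decimal):
        raise TypeError("amount must be a Decimal")
    transaction_log.append((destiny, 'credit', amount))
    transaction_log.append((source, 'debit', -amount))

def sum_zero_audit() -> Decimal:
    # Decimal addition is exact while results fit the context precision
    # (28 significant digits by default), so a balanced ledger sums to exactly 0
    total_balance = sum((entry[2] for entry in transaction_log), Decimal("0"))
    assert total_balance == 0, f"ledger out of balance by {total_balance}"
    return total_balance

add_transaction('/input/sales', '/company/account', Decimal("1000.00"))
add_transaction('/company/account', '/company/user1', Decimal("0.07"))
print(sum_zero_audit())  # 0.00

# A one-sided credit now has nowhere to hide
transaction_log.append(('/company/gus', 'credit', Decimal("0.0000001")))
try:
    sum_zero_audit()
except AssertionError as err:
    print("audit failed:", err)
```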

And with that, you're ready for your well-earned retirement—just like a true Superman 3-style plot. Keep those pennies flying under the radar, and when you cash out, make sure it's in diamonds, jewelry, or some other trace-less treasure. Enjoy the quiet life, sipping margaritas on your beachfront estate, knowing that the floating-point errors worked in your favor all along. Here's to a retirement funded by clever math—just don't forget to keep an eye on your calculations, or the next Superman might be coming to clean up the mess!